Super Computing is future for the cloud infrastructure. The order to meet the daily computing needs has skyrocketed in the last few years due to the fiasco of the big data, The more processing power is required in order to meet our requirements, there is development in the term called super computer these so-called super computers will solve our processing power needs

Here is the list of the Top 10 super computers in the world.

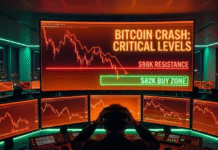

Tianhe-2

It is the world’s fastest supercomputer according to the TOP500 lists for June 2013, November 2013, June 2014, November 2014, June 2015, and November 2015. Plans of the Sun Yat-sen University in collaboration with Guangzhou district and city administration to double its computing capacities were stopped by a US government rejection of Intel’s application for an export license for the CPUs and coprocessor boards. The Wall Street Journal analysts considered this a blow to Intel and their suppliers sales and a drag to US information technologydevelopment, but concurrently a boost for China’s own processor development and production industry.

Specifications of Tianhe-2

According to NUDT, Tianhe-2 would have been used for simulation, analysis, and government security applications.

With 16,000 computer nodes, each comprising two Intel Ivy Bridge Xeon processors and three Xeon Phi coprocessor chips, it represented the world’s largest installation of Ivy Bridge and Xeon Phi chips, counting a total of 3,120,000 cores. Each of the 16,000 nodes possessed 88 gigabytes of memory (64 used by the Ivy Bridge processors, and 8 gigabytes for each of the Xeon Phi processors). The total CPU plus coprocessor memory was 1,375 TiB (approximately 1.34 PiB).

During the testing phase, Tianhe-2 was laid out in a non-optimal confined space. When assembled at its final location, the system will have had a theoretical peak performance of 54.9 petaflops. At peak power consumption, the system itself would have drawn 17.6 megawatts of power. Including external cooling, the system drew an aggregate of 24 megawatts. The completed computer complex would have occupied 720 square meters of space.

The front-end system consisted of 4096 Galaxy FT-1500 CPUs, a SPARC derivative designed and built by NUDT. Each FT-1500 has 16 cores and a 1.8 GHz clock frequency. The chip has a performance of 144 gigaflops and runs on 65 watts. The interconnect, called the TH Express-2, designed by NUDT, utilized a fat tree topology with 13 switches each of 576 ports.

Tianhe-2 ran on Kylin Linux, a version of the operating system developed by NUDT. Resource management is based on Slurm Workload Manager.

TiTan

Titan is a supercomputer built by Cray at Oak Ridge National Laboratory for use in a variety of science projects. Titan is an upgrade of Jaguar, a previous supercomputer at Oak Ridge, that uses graphics processing units (GPUs) in addition to conventional central processing units (CPUs). Titan is the first such hybrid to perform over 10 petaFLOPS. The upgrade began in October 2011, commenced stability testing in October 2012 and it became available to researchers in early 2013. The initial cost of the upgrade was US$60 million, funded primarily by the United States Department of Energy.

Titan is due to be eclipsed at Oak Ridge by Summit in 2018, which is being built by IBM and features fewer nodes with much greater GPU capability per node as well as local per-node non-volatile caching of file data from the system’s parallel file system.

Titan employs AMD Opteron CPUs in conjunction with Nvidia Tesla GPUs to improve energy efficiency while providing an order of magnitude increase in computational power over Jaguar. It uses 18,688 CPUs paired with an equal number of GPUs to perform at a theoretical peak of 27 petaFLOPS; in the LINPACK benchmark used to rank supercomputers’ speed, it performed at 17.59 petaFLOPS. This was enough to take first place in the November 2012 list by the TOP500 organization, but Tianhe-2 overtook it on the June 2013 list.

Titan is available for any scientific purpose; access depends on the importance of the project and its potential to exploit the hybrid architecture. Any selected code must also be executable on other supercomputers to avoid sole dependence on Titan. Six vanguard codes were the first selected. They dealt mostly with molecular scale physics or climate models, while 25 others queued behind them. The inclusion of GPUs compelled authors to alter their codes. The modifications typically increased the degree of parallelism, given that GPUs offer many more simultaneous threads than CPUs. The changes often yield greater performance even on CPU-only machines.

Titan uses Jaguar’s 200 cabinets, covering 404 square meters (4,352 ft2), with replaced internals and upgraded networking.Reusing Jaguar’s power and cooling systems saved approximately $20 million. Power is provided to each cabinet at three-phase 480 V. This requires thinner cables than the US standard 208 V, saving $1 million in copper. At its peak, Titan draws 8.2 MW, 1.2 MW more than Jaguar, but runs almost ten times as fast in terms of floating point calculations. In the event of a power failure, carbon fiber flywheel power storage can keep the networking and storage infrastructure running for up to 16 seconds. After 2 seconds without power, diesel generators fire up, taking approximately 7 seconds to reach full power. They can provide power indefinitely. The generators are designed only to keep the networking and storage components powered so that a reboot is much quicker; the generators are not capable of powering the processing infrastructure.

Titan has 18,688 nodes (4 nodes per blade, 24 blades per cabinet), each containing a 16-core AMD Opteron 6274 CPU with 32 GB of DDR3 ECC memory and an Nvidia Tesla K20X GPU with 6 GB GDDR5 ECC memory. There are a total of 299,008 processor cores, and a total of 693.6 TiB of CPU and GPU RAM.

Initially, Titan used Jaguar’s 10 PB of Lustre storage with a transfer speed of 240 GB/s, but in April 2013, the storage was upgraded to 40 PB with a transfer rate of 1.4 TB/s.GPUs were selected for their vastly higher parallel processing efficiency over CPUs. Although the GPUs have a slower clock speed than the CPUs, each GPU contains 2,688 CUDA cores at 732 MHz, resulting in a faster overall system. Consequently, the CPUs’ cores are used to allocate tasks to the GPUs rather than directly processing the data as in conventional supercomputers.

Titan runs the Cray Linux Environment, a full version of Linux on the login nodes that users directly access, but a smaller, more efficient version on the compute nodes.

Titan’s components are air-cooled by heat sinks, but the air is chilled before being pumped through the cabinets. Fan noise is so loud that hearing protection is required for people spending more than 15 minutes in the machine room. The system has a cooling capacity of 23.2 MW (6600 tons) and works by chilling water to 5.5 °C (42 °F), which in turn cools recirculated air.

Researchers also have access to EVEREST (Exploratory Visualization Environment for Research and Technology) to better understand the data that Titan outputs. EVEREST is a visualization room with a 10 by 3 meter (33 by 10 ft) screen and a smaller, secondary screen. The screens are 37 and 33 megapixels respectively with stereoscopic 3D capability.

IBM Sequoia

IBM Sequoia is a petascale Blue Gene/Q supercomputer constructed by IBM for the National Nuclear Security Administration as part of the Advanced Simulation and Computing Program (ASC). It was delivered to the Lawrence Livermore National Laboratory (LLNL) in 2011 and was fully deployed in June 2012.

On June 14, 2012, the TOP500 Project Committee announced that Sequoia replaced the K computer as the world’s fastest supercomputer, with a LINPACK performance of 16.32 petaflops, 55% faster than the K computer’s 10.51 petaflops, having 123% more cores than the K computer’s 705,024 cores. Sequoia is also more energy efficient, as it consumes 7.9 MW, 37% less than the K computer’s 12.6 MW.

As of June 17, 2013, Sequoia had dropped to #3 on the TOP500 ranking, behind Tianhe-2 and Titan.[5] It is still #3 on the TOP500 ranking of November 2014.

Record-breaking science applications have been run on Sequoia, the first to cross 10 petaflops of sustained performance. The cosmology simulation framework HACC achieved almost 14 petaflops with a 3.6 trillion particle benchmark run, while the Cardioid code, which models the electrophysiology of the human heart, achieved nearly 12 petaflops with a near real-time simulation.

The entire supercomputer runs on Linux, with CNK running on over 98,000 nodes, and Red Hat Enterprise Linux running on 768 I/O nodes that are connected to the Lustre filesystem.

K computer

The K computer – named for the Japanese word “kei” (京?), meaning 10 quadrillion. It is a supercomputer manufactured by Fujitsu, currently installed at the RIKEN Advanced Institute for Computational Science campus in Kobe, Japan. The K computer is based on a distributed memory architecture with over 80,000 computer nodes. It is used for a variety of applications, including climate research, disaster prevention and medical research. The K computer’s operating system is based on the Linux kernel, with additional drivers designed to make use of the computer’s hardware.

In June 2011, TOP500 ranked K the world’s fastest supercomputer, with a computation speed of over 8 petaflops, and in November 2011, K became the first computer to top 10 petaflops. It had originally been slated for completion in June 2012. In June 2012, K was superseded as the world’s fastest supercomputer by the American IBM Sequoia and as of November 2015, K is the world’s fourth-fastest computer.

IBM Mira

Mira is a petascale Blue Gene/Q supercomputer. As of June 2013, it is listed on TOP500 as the fifth-fastest supercomputer in the world. It has a performance of 8.59 petaflops (LINPACK) and consumes 3.9 MW.[3] The supercomputer was constructed by IBM for Argonne National Laboratory’s Argonne Leadership Computing Facility with the support of the United States Department of Energy, and partially funded by the National Science Foundation. Mira will be used for scientific research, including studies in the fields of material science, climatology, seismology, and computational chemistry. The supercomputer is being utilized initially for sixteen projects, selected by the Department of Energy.

The Argonne Leadership Computing Facility, which commissioned the supercomputer, was established by the America COMPETES Act, signed by President Bush in 2007, and President Obama in 2011. The United States’ emphasis on supercomputing has been seen as a response to China’s progress in the field. China’s Tianhe-1A, located at the Tianjin National Supercomputer Center, was ranked the most powerful supercomputer in the world from October 2010 to June 2011. Mira is, along with IBM Sequoia and Blue Waters, one of three American petascale supercomputers deployed in 2012.

Mira supercomputer at Argonne National Laboratory

The cost for building Mira has not been released by IBM. Early reports estimated that construction would cost US$50 million, and Argonne National Laboratory announced that Mira was bought using money from a grant of US$180 million. In a press release, IBM marketed the supercomputer’s speed, claiming that “if every man, woman and child in the United States performed one calculation each second, it would take them almost a year to do as many calculations as Mira will do in one second”.

Trinity

he NNSA Office of Advanced Simulation and Computing (ASC) is faced with significant challenges by ongoing technology advancements and must continue to meet the mission needs of the current applications while also adapting to computing technology revolutionary and evolutionary changes. ASC recognizes that the simulation environment of the future will be transformed with new computing architectures and new programming models and has established the development and deployment of a series of Advanced Technology (AT) systems. The ASC roadmap states “work in this timeframe will establish the technological foundation to build toward exascale computing environments, which predictive capability may demand.” It is critical for ASC to both explore the rapidly changing technology of future systems and to provide platforms with more capability and higher performance for predictive capability. Trinity is the first instantiation of an AT system and will achieve a balance between usability of the current simulation codes while also allowing adaptation to new computing technologies and programming methodologies.

The Trinity supercomputer is provided by Cray, Inc. and is based on its XC30 platform architecture. Trinity is a mixture of Intel Haswell and Knights Landing (KNL) processors. The Haswell partition provides a natural transition path for many of the legacy codes running on the Cielo supercomputer, Trinity’s predecessor. In order to effectively use the KNL processor to its full potential, the ASC code teams to must expose higher levels of thread- and vector-level parallelism than has been necessary for the traditional multicore architectures. To help facilitate this transition, the Trinity Center of Excellence was established, with staff from the ASC tri-Labs, Cray, and Intel.

Trinity introduces tightly integrated nonvolatile “burst buffer” storage capabilities. Embedded within the high-speed fabric are nodes with attached solid-state disk drives. The burst buffer capability will allow for accelerated checkpoint/restart performance and relieve much of the pressure normally loaded on the back-end storage arrays. In addition, the burst buffer will support novel new workload management strategies such as in-situ analysis, which opens a whole space in which projects can manage their overall workflows.

Trinity also introduces advanced power management functionality that allows monitoring and control of power consumption at the system, application, and component levels. Although advanced power management is not needed for the current power and operational budget, its functionality is being used to gain a better understanding for future system requirements and features.

Trinity High-level Technical Specifications |

|

|---|---|

Operational Lifetime |

2015 to 2020 |

Capability |

8x to 12x improvement over Cielo in fidelity, physics, and performance capabilities |

Architecture |

Cray XC30 |

Memory capacity |

>2 PB of DDR4 DRAM |

Peak performance |

>40 PF |

Number of compute nodes |

>19,000 |

Processor architecture |

Intel Haswell & Knights Landing |

Parallel file system capacity (usable) |

>80 PB |

Parallel file system bandwidth (sustained) |

1.45 TB/s |

Burst buffer storage capacity (usable) |

3.7 PB |

Burst buffer bandwidth (sustained) |

3.3 TB/s |

Footprint |

<5,200 sq ft |

Power requirement |

<10 MW |

piz daint

CSCS organized March 24-27 a 4-day training course on “Piz Daint” CSCS hybrid Cray XC30 system. “Piz Daint” has 5.272 compute nodes (with Intel® Xeon® E5-2670 and NVIDIA® Tesla® K20X) and a peak performance of 7.8 Petaflops. The presentations have being given by experts from Cray, NVIDIA and Allinea.

hazel hen

The High Performance Computing Center Stuttgart (HLRS), member of the Gauss Centre for Supercomputing, today reported completion of the second upgrade of its supercomputing-installation. The Cray (NASDAQ: CRAY) XC40 system at HLRS – code named “Hazel Hen” – delivers a peak performance of 7.42 Petaflops, almost twice as much as the previous system known as Hornet. Hazel Hen marks the final expansion stage as defined in HLRS’s system roadmap and is now officially open for operation and available to support the national and European scientific and industrial users.

Hazel Hen is powered by the latest Intel Xeon processor technologies and the CRAY Aries Interconnect technology leveraging the Dragonfly network topology. The installation encompasses 41 system cabinets hosting 7,712 compute notes with a total of 185,088 Intel Haswell E5-2680 v3 compute cores. Hazel Hen features 965 Terabyte of Main Memory and a total of 11 Petabyte of storage capacity spread over 32 additional cabinets hosting more than 8,300 disk drives which can be accessed with an input-/output rate of more than 350 Gigabyte per second.

As with its previous installation, HLRS had Hazel Hen vigorously tested the new systems prior to declaring it “up and running”. Scientists of the Institute of Aerodynamics (AIA) at the RWTH Aachen University leveraged HLRS’s new HPC platform for studies within the scope of the special research project (Sonderforschungsprojekt) SFB/TransRegio 129/Oxyflame which aims at reducing the emission of CO2 by conventional coal-fired power plants through oxy-fuel combustion.

Simulating the heating processes of coal dust, the scientists aimed at gaining a better understanding about the conditions causing the carbon dust to ignite in an oxygen-carbon dioxide atmosphere. Calculations of such scenarios are extremely complex since carbon particles are of irregular, non-spherical shape which is why their motion is difficult to predict. Hazel Hen allows for the simulation of thousands of fully dissolved carbon particles moving freely in a turbulent flow. “Thanks to the computing capacities offered by Hazel Hen, we are able to execute calculations with particle numbers of a magnitude that up to now would have required several individual simulation steps,” explains principal investigator Dr. Matthias Meinke of the AIA.

Researchers of the Institute for Applied Materials (IAM) of the Karlsruhe Institute for Technology (KIT) and of the Institute of Materials and Processes (IMP) of the Karlsruhe University of Applied Sciences are leveraging the computing capacity of Hazel Hen for numerical simulations of solidification processes using the phase-field method. They simulated the ternary eutectic directional solidification of Al-Ag-Cu (Aluminum – Silver – Copper) in an area of 4116 x 4008 x 1000 cells with the aim to study the resulting patterns and the 3D-development of the micro structure. Using 171,696 compute cores of Hazel Hen, the researchers used the computing capacity of the new HLRS supercomputer almost to its full extent. Ternary super-alloys with defined properties for high-performance materials are e.g. of growing importance in the aerospace industry. A solid understanding of the material- and process parameters of the solidifying process is thus indispensable for which simulations like the ones executed on Hazel Hen provide valuable insight.

“With Hazel Hen, we again are in the favourable position of being able to offer our users a state-of-the-art HPC system that meets their requirements,” explains Professor Dr.-Ing. Michael M. Resch, Director of the HLRS. “The first user projects already did deliver outstanding results and we are confident for Hazel Hen to achieve further simulation high- lights in the future.”

With the installation of Hazel Hen, HLRS completed the last step of its system roadmap as defined with the current purchasing plan by the German Federal Ministry of Education and Research and the federal states of Baden-Württemberg, Bavaria and North Rhine- Westphalia. This purchasing plan specified the step-by-step installation and expansion of Tier-0 HPC systems at the three national German high-performance computing centres in Stuttgart (HLRS), Garching near Munich (Leibniz Supercomputing Centre/LRZ) and in Jülich (Jülich Supercomputing Centre/JSC) to ensure Germany’s competitiveness in the global HPC arena.

The Gauss Centre for Supercomputing (GCS) combines the three national supercomputing centres HLRS (High Performance Computing Center Stuttgart), JSC (Jülich Supercomputing Centre), and LRZ (Leibniz Supercomputing Centre, Garching near Munich) into Germany’s Tier-0 supercomputing institution. Concertedly, the three centres provide the largest and most powerful supercomputing infrastructure in all of Europe to serve a wide range of industrial and research activities in various disciplines.

Shaheen 2

Shaheen consists primarily of a 16-rack IBM Blue Gene/P supercomputer owned and operated by King Abdullah University of Science and Technology (KAUST). Built in partnership with IBM, Shaheen is intended to enable KAUST Faculty and Partners to research both large- and small-scale projects, from inception to realization.

Shaheen, named after the Peregrine Falcon, was the largest and most powerful supercomputer in the Middle East and is intended to grow into a petascale facility by the year 2011, Originally built at IBM’s Thomas J. Watson Research Center in Yorktown Heights, New York, Shaheen was moved to KAUST in mid-2009.

The father of Shaheen is Majid Alghaslan, KAUST’s founding interim chief information officer and the University’s leader in the acquisition, design, and development of the Shaheen supercomputer. Majid was part of the executive founding team for the University and the person who also named the machine

Shaheen includes the following functional elements:

16 racks of Blue Gene/P, having a peak performance of 222 Teraflops

164 IBM IBM System x 3550 Xeon nodes, having a peak performance of 12 Teraflops

Performance

Shaheen’s performance and computing capabilities include:

65,536 independent processing cores.

A next generation data center that is able to scale to exascale computing requirements

10 Gbit/s access to world’s academic and research networks.

The file system and tape drive will be mounted across both the Blue Gene system and the Linux cluster. All elements of the system will be connected together on a common network backbone that is accessible from all campus buildings. The systems will also be accessible from the Internet.

Services

The Shaheen system at KAUST Supercomputing Laboratory (KSL) is available to help KAUST users and projects, to provide training and advice, to develop and deploy applications, to provide consultation on best practices and to provide collaboration support as needed.

KAUST Faculty will have access to:

General support for Shaheen facility use, including usage scheduling of Shaheen and peripheral systems

High-performance computing support for “Grand Challenges” by collaboration with the Center to deliver fundamental breakthroughs in specific areas of research

Collaboration to provide high-performance computing applications, middleware, library, algorithm support and enablement services

Applications Enablement where users can task the CDCR to develop, enable, port and scale key applications

High-performance Computing Program Best Practice Management techniques

Participation with KAUST researchers in external projects

Training on high-performance computing systems management, programming, applications tuning and algorithms

Development of missiles land-land

Future Plans

On Monday 17th November 2014 KAUST announced the successor to the Blue Gene/P system that was installed in June 2009. Cray will provide KAUST with a Cray® XC40™ supercomputer with DataWarp™ technology, a Cray® Sonexion® 2000 storage system, a Cray Tiered Adaptive Storage (TAS) system and a Cray® Urika-GD™ graph analytics appliance. The Cray XC40 system at KAUST, with the project name “Shaheen II,” will be 25 times more powerful than its current system. KAUST will significantly augment its world-class academic and research facilities and capabilities to advance scientific discoveries.

Stampede

Stampede is one of the most powerful supercomputers in the world. But, what does this mean and why is it important?

Supercomputers complement scientific theory and observation by modeling and analyzing anything that is too large (planets), too small (drug molecules), or too expensive or dangerous (crash tests for cars) to test in the laboratory. Determining where and when earthquakes will strike; exploring which nanomaterials will convert sunlight into energy; and understanding how fast brain tumors grow — these important and complex societal problems require powerful computers like Stampede, which provides a peak performance of nearly 10 petaflops (PF), or nearly 10 quadrillion math operations per second.

Stampede is an important part of NSF’s portfolio for advanced computing infrastructure enabling cutting-edge foundational research for computational and data-intensive science and engineering. Society’s ability to address today’s global challenges depends on advancing cyberinfrastructure.

FARNAM JAHANIAN, HEAD OF NSF’S DIRECTORATE FOR COMPUTER AND INFORMATION SCIENCE AND ENGINEERING

TOTAL PEAK PERFORMANCE

1 petaflop (PF) = 1 quadrillion math operations per second

The most powerful systems in the world are petascale systems, where a massive number of computers work in parallel to solve the same problem. Given the current speed of progress, industry experts estimate that supercomputers will reach one exaflop (one quintillion operations per second) by 2018.